Examples of A1 following human commands in natural language

SayTap: Language to Quadrupedal Locomotion

Large language models (LLMs) have demonstrated the potential to perform high-level planning. Yet it remains a challenge for LLMs to comprehend low-level commands, such as joint angle targets or motor torques. This work proposes an approach that uses foot contact patterns as an interface bridging human commands in natural language and a locomotion controller that outputs these low-level commands. This results in an interactive system for quadrupedal robots that allows users to flexibly craft diverse locomotion behaviors. We contribute an LLM prompt design, a reward function, and a method to expose the controller to the feasible distribution of contact patterns. The result is a controller capable of achieving diverse locomotion patterns that can be transferred to real robot hardware. Compared with other design choices, the proposed approach enjoys more than 50% success rate in predicting the correct contact patterns and can solve 10 more tasks out of a total of 30 tasks.

Method

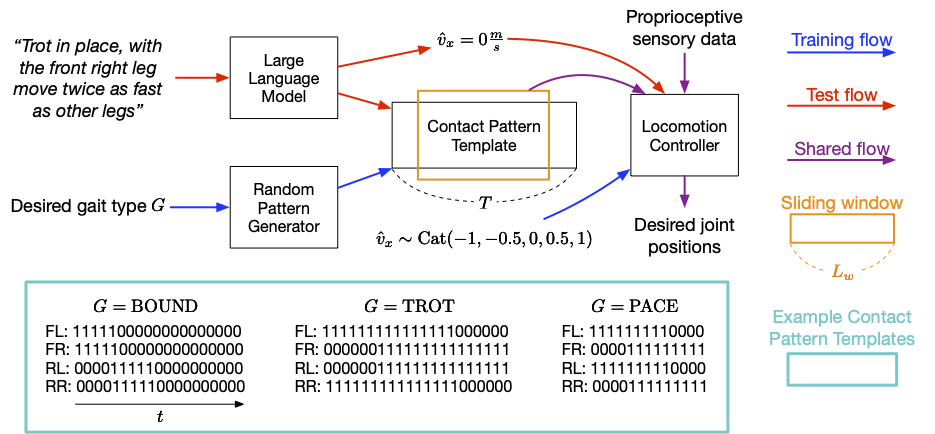

The core idea of our approach is to introduce desired foot contact patterns as a new interface between human commands in natural language and the locomotion controller. The locomotion controller is required not only to complete the main task (e.g., following specified velocities), but also to place the robot's feet on the ground at the right time, such that the realized foot contact patterns are as close as possible to the desired ones. To achieve this, the locomotion controller takes a desired foot contact pattern at each time step as its input, in addition to the robot's proprioceptive sensory data and task-related inputs (e.g., user-specified velocity commands). During training, a random generator creates these desired foot contact patterns, while at test time an LLM translates human commands into them. The figure above gives an overview of the proposed system.
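To make the input interface concrete, here is a minimal sketch of how the controller's observation could be assembled at each time step. It assumes the desired contact pattern arrives as a 4 × L_w grid of 0/1 flags (one row per foot) and that proprioceptive data and velocity commands are flat lists of floats. The function name `build_policy_input` and the concatenation order are hypothetical choices for illustration, not the paper's implementation.

```python
from typing import List, Sequence


def build_policy_input(
    proprio: Sequence[float],
    velocity_cmd: Sequence[float],
    desired_contacts: Sequence[Sequence[int]],
) -> List[float]:
    """Concatenate the three input groups into one flat observation vector.

    Hypothetical layout (an assumption for illustration):
    [proprioception | task inputs (velocity command) | desired contacts, row by row]
    """
    if len(desired_contacts) != 4:
        raise ValueError("expected one row per foot: FL, FR, RL, RR")
    window = len(desired_contacts[0])
    for row in desired_contacts:
        if len(row) != window or any(flag not in (0, 1) for flag in row):
            raise ValueError("each row must be a 0/1 sequence of the same length")
    # Flatten the 4 x L_w contact grid foot by foot (FL, FR, RL, RR).
    flat_contacts = [float(flag) for row in desired_contacts for flag in row]
    return list(proprio) + list(velocity_cmd) + flat_contacts


obs = build_policy_input([0.1, -0.2], [0.5, 0.0, 0.0], [[1, 0], [0, 1], [0, 1], [1, 0]])
print(len(obs))  # 2 proprioceptive + 3 command + 8 contact values = 13
```

One consequence of this design is that the controller does not need to know where the desired pattern came from: during training the grid would be produced by the random generator, and at test time by the LLM.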

In this paper, a desired foot contact pattern is defined by a cyclic sliding window of size $L_w$ that extracts the ground contact flags of the four feet between $t+1$ and $t+L_w$ from a pattern template, and is therefore of shape $4 \times L_w$. A contact pattern template is a matrix of '0's and '1's, with '0's representing feet in the air and '1's representing feet on the ground. From top to bottom, the rows of the matrix give the foot contact patterns of the front left (FL), front right (FR), rear left (RL), and rear right (RR) feet. We demonstrate that, given properly designed prompts, the LLM can accurately map human commands into foot contact pattern templates in specified formats, even when the commands are unstructured and vague. In training, we use a random pattern generator to produce contact pattern templates with various pattern lengths $T$ and foot-ground contact ratios within a cycle based on a given gait type $G$, so that the locomotion controller learns on a wide distribution of movements and generalizes better.
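The template and sliding-window definitions can be sketched in plain Python. Everything here is illustrative: the `FL: 1100` line format assumed for LLM output, the helper names, the sampling ranges for the pattern length $T$ and the contact ratio, and the per-gait phase offsets are our assumptions rather than the paper's exact generator. What the sketch does follow from the text is the row order (FL, FR, RL, RR), the 0/1 encoding, and the cyclic window over columns $t+1$ to $t+L_w$.

```python
import random
from typing import Dict, List, Sequence, Tuple

FEET = ("FL", "FR", "RL", "RR")  # row order of a contact pattern template

# Hypothetical phase offsets (fraction of a cycle) per foot, in FEET order.
GAIT_OFFSETS: Dict[str, Tuple[float, float, float, float]] = {
    "trot": (0.0, 0.5, 0.5, 0.0),   # diagonal pairs (FL+RR, FR+RL) move together
    "pace": (0.0, 0.5, 0.0, 0.5),   # same-side pairs (FL+RL, FR+RR) move together
    "bound": (0.0, 0.0, 0.5, 0.5),  # front pair, then rear pair
}


def make_template(length: int, contact_ratio: float, gait: str) -> List[List[int]]:
    """Build a 4 x T template: 1 = foot on the ground, 0 = foot in the air."""
    stance = round(contact_ratio * length)  # ground-contact steps per cycle
    template = []
    for offset in GAIT_OFFSETS[gait]:
        start = round(offset * length)  # column where this foot touches down
        template.append([1 if (c - start) % length < stance else 0 for c in range(length)])
    return template


def random_template(rng: random.Random) -> List[List[int]]:
    """Sample a template with random length T, contact ratio, and gait G (assumed ranges)."""
    length = rng.randint(12, 30)
    contact_ratio = rng.uniform(0.3, 0.7)
    gait = rng.choice(sorted(GAIT_OFFSETS))
    return make_template(length, contact_ratio, gait)


def parse_template(text: str) -> List[List[int]]:
    """Parse lines such as 'FL: 1100' (an assumed LLM output format) into a 4 x T template."""
    rows: Dict[str, List[int]] = {}
    for line in text.strip().splitlines():
        if not line.strip():
            continue
        name, _, flags = line.partition(":")
        rows[name.strip()] = [int(ch) for ch in flags.strip()]
    template = [rows[foot] for foot in FEET]
    if len({len(row) for row in template}) != 1:
        raise ValueError("all four rows must have the same pattern length T")
    return template


def contact_window(template: Sequence[Sequence[int]], t: int, window: int) -> List[List[int]]:
    """Cyclic sliding window: columns t+1 .. t+L_w of the template, wrapping modulo T."""
    length = len(template[0])
    return [[row[(t + k) % length] for k in range(1, window + 1)] for row in template]


tpl = parse_template("FL: 1100\nFR: 0011\nRL: 0011\nRR: 1100")
print(contact_window(tpl, t=2, window=3))  # [[0, 1, 1], [1, 0, 0], [1, 0, 0], [0, 1, 1]]
```

In this example the window at $t = 2$ reads columns 3, 0, and 1, so it wraps around the end of the 4-step template, which is what makes the window cyclic. The parsed pattern is also exactly what `make_template(4, 0.5, "trot")` produces, since a trot alternates the two diagonal pairs.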

Please check out our paper for more details.